Emergent Model Capabilities

This thought was heavily inspired by this video

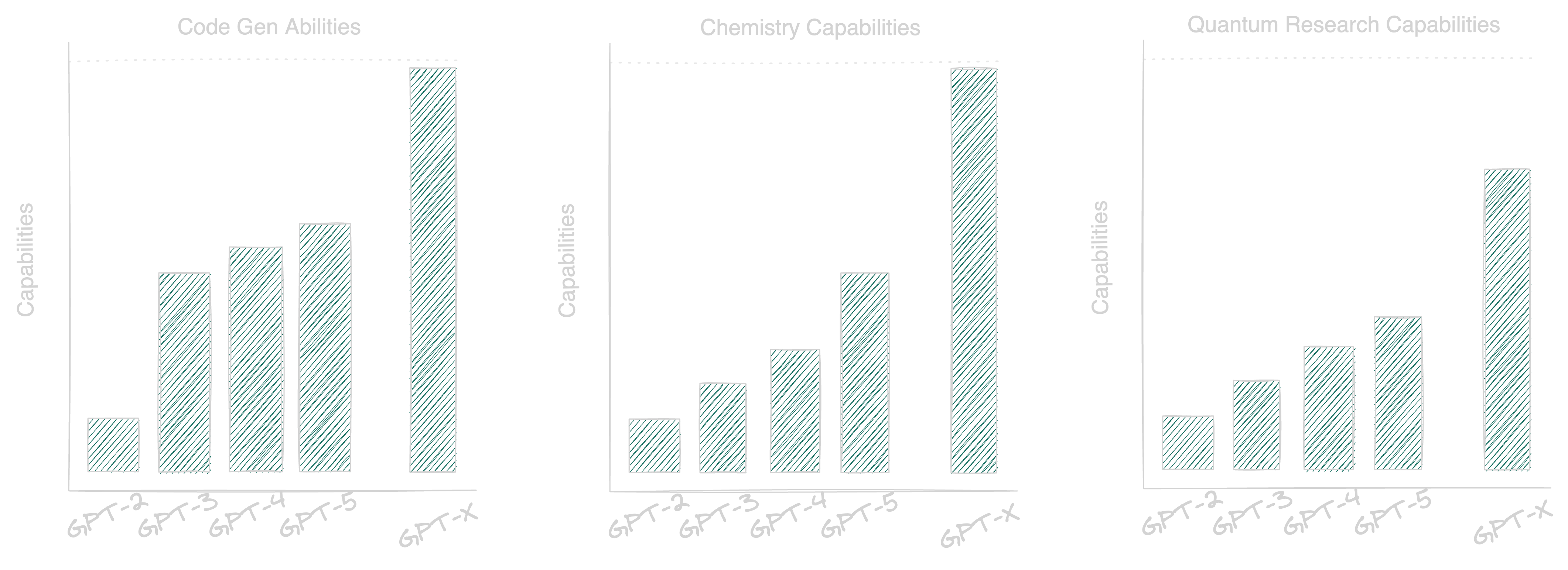

The graph below captures how models’ emergent abilities work. Nobody thought that the first few use cases of LLMs would largely be focused on knowledge work (code generation, coherent text generation), but it turns out LLMs are really good at that. In terms of capabilities, it’s hard to know when a model is progressing linearly and when it is about to progress exponentially. We know that from GPT-3.5 → GPT-4, the progress in code generation was somewhat linear. But maybe GPT-5 will be incredible at chemistry and we’re already on the verge of that, and maybe it’ll take until GPT-10 for a model to do truly novel research in a given field (e.g. make new discoveries in quantum mechanics).

The examples above are seemingly arbitrary, and that is exactly the point: nobody (or at least very few people) predicted the emergent abilities of models post-GPT-3, and it is unlikely that we will be able to predict the emergent abilities of GPT-5 and beyond. For this reason, we remain positive and excited about pushing forward the state of the art in LLMs, be that through continuing to push transformers until we see their scaling limits, new model architectures, novel datasets, or many other approaches.